About Docker

Docker is a platform for building, shipping, and running applications in containers. Containers are lightweight, portable, and self-contained units of software that can run reliably and consistently across different environments. Docker provides a way to package an application and its dependencies into a single container, which can be easily deployed and managed.

With Docker, developers can build and test applications locally on their development machines and then package them into containers that can be deployed to any environment that runs Docker. This makes it easy to move applications between development, testing, and production environments, with far fewer problems caused by compatibility issues or differences in the underlying infrastructure.

Some of the benefits of using Docker include:

Consistency: Containers provide a consistent runtime environment, ensuring that applications run the same way across different environments.

Efficiency: Containers are lightweight and share the host operating system's kernel, which means they require fewer resources than traditional virtual machines.

Portability: Containers can be easily moved between different environments, such as from a developer's laptop to a production server.

Security: Containers provide a level of isolation between applications and the host system, which can help prevent security vulnerabilities from spreading.

Overall, Docker is a powerful tool for building and deploying applications in a consistent, efficient, and portable way.

Differences between Virtual Machines and Docker

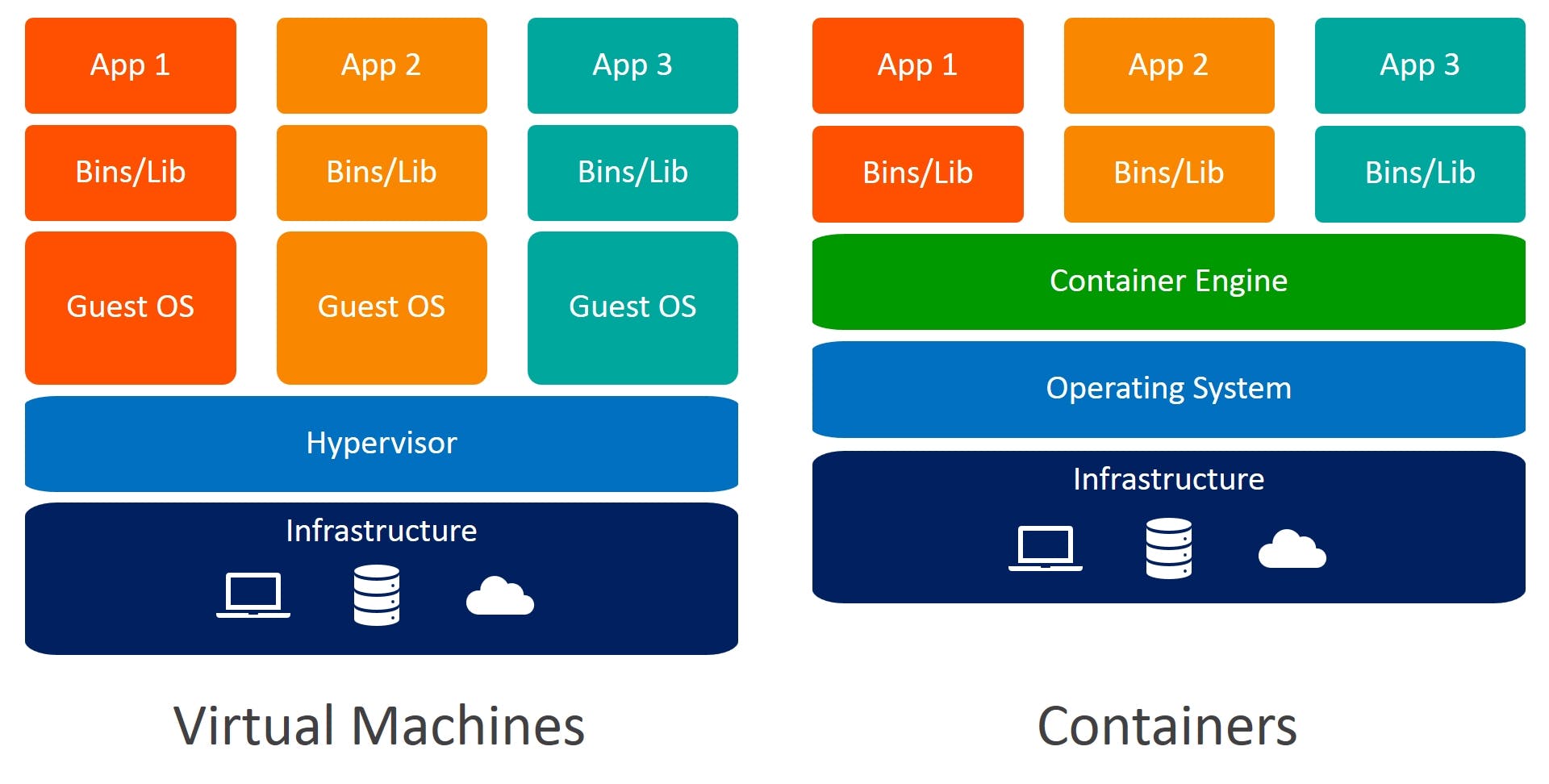

Virtual machines and Docker are two technologies used to create isolated environments for running applications, but they differ in several ways:

1 - Resource usage: Virtual machines require a complete operating system, which can be resource-intensive. Docker containers, on the other hand, share the host operating system's kernel, resulting in lower resource usage and faster startup times.

2 - Isolation: Virtual machines provide full isolation between the guest operating system and the host operating system, while Docker containers provide lighter, process-level isolation (using Linux kernel features such as namespaces and cgroups) because they share the host's kernel.

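3 - Portability: Virtual machines can be moved between different hypervisors or cloud providers, but this can be complex and time-consuming. Docker containers are highly portable and can be moved easily between different hosts and clouds, as long as the target provides a compatible kernel and CPU architecture. For example, Linux containers need a Linux kernel, so on macOS and Windows, Docker Desktop runs them inside a lightweight Linux virtual machine.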

4 - Deployment: Virtual machines are typically deployed as self-contained units with their own operating system and applications. Docker containers are created from images, which can be tagged, versioned, and shared through registries such as Docker Hub, making them more flexible and easier to manage.

5 - Maintenance: Virtual machines require more maintenance than Docker containers, because each guest operating system needs to be patched, updated, and managed like any other operating system. Docker containers are usually updated by rebuilding the image and replacing the running container with a new one, without having to update an entire operating system.

Overall, the main difference between virtual machines and Docker is that virtual machines provide full isolation and require more resources, while Docker provides lighter isolation and requires fewer resources. Docker also provides greater portability and easier management, making it a popular choice for modern application development and deployment.

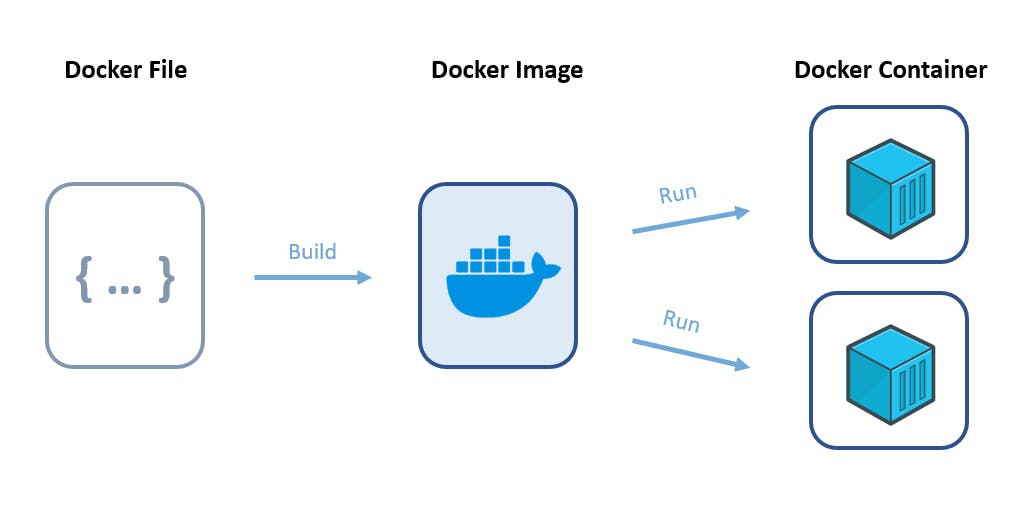

Images and Containers

An image is a lightweight, standalone, and executable package that includes everything needed to run an application, such as the code, dependencies, and configuration. Think of it as a snapshot of an application's environment at a specific point in time. Docker images are usually built from a Dockerfile, which contains instructions for how to build the image.

A container is a runtime instance of an image, which is isolated from the host system and other containers on the same system. Each container has its own file system, networking, and process space, making it possible to run multiple applications in isolation on the same host. Containers are started from images using the docker run command, and they can be managed and monitored using the Docker CLI or API.
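To make the image/container relationship concrete, here is a toy Python model. It is only an analogy, not how Docker is implemented: an image is an immutable snapshot of files, and each container created from it gets its own writable layer on top, so changes made in one container are invisible to the image and to other containers. The names `Image`, `Container`, and `build_image` are hypothetical.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Dict, Mapping


@dataclass(frozen=True)
class Image:
    """Hypothetical read-only image: a name plus a snapshot of its files."""

    name: str
    files: Mapping[str, str]


@dataclass
class Container:
    """Hypothetical container: shares an image and owns a private writable layer."""

    image: Image
    writable: Dict[str, str] = field(default_factory=dict)

    def read(self, path: str) -> str:
        # A file written in this container shadows the image's copy.
        if path in self.writable:
            return self.writable[path]
        return self.image.files[path]

    def write(self, path: str, content: str) -> None:
        # Writes land only in this container's layer; the image never changes.
        self.writable[path] = content


def build_image(name: str, files: Dict[str, str]) -> Image:
    # Copy the dict and wrap it in a read-only view, so the snapshot is fixed.
    return Image(name, MappingProxyType(dict(files)))
```

For example, if two containers `a` and `b` are created from the same image and `a` writes to `/app/main.py`, only `a` sees the new content; `b` and the image still see the original file.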

Reasons to use a Dockerfile

A Dockerfile is a script that contains a set of instructions for building a Docker image. Here are some reasons why you should use one:

Reproducibility: By using a Dockerfile, you can define the exact environment that your application needs to run. This makes it easy to reproduce the same environment across different machines and platforms, which can help reduce bugs and ensure consistency.

Version control: Dockerfiles can be stored in version control systems like Git, which allows you to track changes to the environment and roll back to previous versions if necessary.

Automation: Image builds from Dockerfiles can be automated with Continuous Integration and Continuous Deployment (CI/CD) tools such as Jenkins or Travis CI. This makes it easy to build, test, and deploy Docker images in a repeatable and scalable way.

Modularity: Dockerfiles allow you to break down your application into smaller, reusable components, which can be shared and combined with other Docker images to create more complex applications.

Security: Dockerfiles enable you to define and isolate the specific software and dependencies that your application needs, reducing the attack surface and improving security.

Commands in a Dockerfile

A Dockerfile supports many instructions (often called commands) for building a Docker image. Here are some of the most commonly used ones:

FROM: Specifies the base image that will be used to build the new image.

RUN: Executes a command during the build process, for example installing dependencies or updating system packages, and saves the result as a new layer of the image.

COPY: Copies files and directories from the build context (usually the directory where you run docker build) into the image.

ADD: Works like COPY, but can also automatically extract local tar archives and download files from a URL.

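ENV: Sets environment variables that are available to later build instructions and to containers started from the image.

WORKDIR: Sets the working directory for subsequent instructions such as RUN, COPY, and CMD, and the default working directory of the running container.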

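CMD: Specifies the default command to run when the container starts. It can be overridden by passing a different command to docker run.

ENTRYPOINT: Specifies the executable to run when the container starts. Arguments passed to docker run (or defined with CMD) are appended to it instead of replacing it; it can only be overridden explicitly with docker run --entrypoint.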

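EXPOSE: Documents the port that the application inside the container listens on. It does not publish the port to the host; that is done with docker run -p.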

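VOLUME: Creates a mount point at the given path inside the container and marks it as holding externally mounted volumes, so data stored there is kept outside the container's writable layer.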

These are just a few of the commands available in a Dockerfile. For a full list, please refer to the Docker documentation.
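The difference between CMD and ENTRYPOINT is easiest to see in how they combine into the command a container actually starts. The function below is a hypothetical, simplified model of the rules in the Docker documentation. It covers exec-form (JSON array) instructions only, since shell-form behaves differently, and it treats the `--entrypoint` value as a single executable name, which is a simplifying assumption. In practice, even ENTRYPOINT can be replaced with `docker run --entrypoint`, which also clears the image's CMD.

```python
from typing import List, Optional


def effective_command(
    entrypoint: Optional[List[str]],
    cmd: Optional[List[str]],
    run_args: Optional[List[str]] = None,
    entrypoint_override: Optional[str] = None,
) -> List[str]:
    """Simplified model of the command a container starts with.

    Covers exec-form (JSON array) ENTRYPOINT and CMD only.
    run_args: extra arguments given after the image name in `docker run`.
    entrypoint_override: value of `docker run --entrypoint`, treated here as
    a single executable name (a simplifying assumption).
    """
    if entrypoint_override is not None:
        # --entrypoint replaces ENTRYPOINT and also clears the image's CMD.
        entrypoint = [entrypoint_override]
        cmd = None
    # Arguments passed to `docker run` replace CMD; otherwise CMD is the default.
    args = run_args if run_args else (cmd or [])
    # ENTRYPOINT (if any) always comes first; the arguments are appended to it.
    return list(entrypoint or []) + list(args)


# The tutorial's Dockerfile later uses CMD only (no ENTRYPOINT).
TUTORIAL_CMD = ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]
```

With CMD only, `docker run my_fastapi_project python --version` replaces the whole command. If the image instead used `ENTRYPOINT ["uvicorn"]`, the same extra arguments would be appended to `uvicorn` rather than replacing it.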

Dockerize a Project

That's enough theory. Let's put it into practice.

Here's an example of how you can dockerize a simple FastAPI project:

cd ~/Desktop

mkdir simplefastapi && cd simplefastapi

touch Dockerfile requirements.txt main.py

cd ~/Desktop: This command changes the current working directory to the Desktop directory in the user's home directory.

mkdir simplefastapi && cd simplefastapi: This command creates a new directory called simplefastapi on the Desktop and then changes into it. The && operator runs the second command only if the first one succeeds, which lets you chain two dependent commands on a single line.

touch Dockerfile requirements.txt main.py: This command creates three empty files called Dockerfile, requirements.txt, and main.py in the simplefastapi directory.
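If you would rather script the setup, the same steps can be sketched with Python's standard `pathlib` module. `scaffold_project` is a hypothetical helper name. Like plain `mkdir` (without `-p`), it fails if the directory already exists, and like `touch`, it creates empty files or just updates their timestamps if they already exist.

```python
from pathlib import Path


def scaffold_project(parent: Path, name: str = "simplefastapi") -> Path:
    """Create parent/name plus three empty files, mirroring mkdir + touch."""
    project = parent / name
    # Like plain `mkdir`: raise FileExistsError if the directory already exists.
    project.mkdir()
    for filename in ("Dockerfile", "requirements.txt", "main.py"):
        # Like `touch`: create an empty file, or update its mtime if it exists.
        (project / filename).touch()
    return project
```

Calling `scaffold_project(Path.home() / "Desktop")` corresponds to the three shell commands above, assuming the Desktop directory exists.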

Open main.py and add the following code:

from fastapi import FastAPI

app = FastAPI()


@app.get("/")
async def index():
    return {"response": "Hello World"}

Add the dependencies your project needs to requirements.txt. For example:

fastapi

uvicorn

Now open the Dockerfile and add the following contents:

FROM python:latest

WORKDIR /app

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY . .

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]

Here's a breakdown of the commands in the Dockerfile:

FROM python:latest: This specifies the base image for building the Docker image. In this case, it uses the latest version of the official Python Docker image. Because the latest tag changes over time, pinning a specific tag such as python:3.12 makes builds more reproducible.

WORKDIR /app: This sets the working directory to /app for the rest of the instructions in the Dockerfile and for the running container, creating the directory if it doesn't exist.

COPY requirements.txt .: This copies the requirements.txt file from the build context (the directory passed to docker build, here the current directory) into /app inside the image.

RUN pip install -r requirements.txt: This installs the Python dependencies listed in requirements.txt inside the image.

COPY . .: This copies all the files from the build context into /app inside the image.

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]: This specifies the default command to run when the container starts. It launches the Uvicorn web server with the argument main:app, which tells Uvicorn to load the FastAPI object named app from the module main (main.py), and binds the server to host 0.0.0.0 on port 80. Binding to 0.0.0.0 rather than 127.0.0.1 makes the server reachable from outside the container.

Overall, this Dockerfile sets up an environment to run a Python FastAPI application with the required dependencies installed, and starts a web server to serve the application on port 80 inside the Docker container.
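The reason requirements.txt is copied and installed before COPY . . is Docker's build cache: a cached layer is reused only while its instruction and every instruction before it are unchanged, and for COPY and ADD the contents of the copied files are checksummed as well. The sketch below is a deliberately simplified, hypothetical model of that idea (Docker's real cache keys are computed differently, and `cache_keys` and `reused_steps` are illustrative names). It shows that editing main.py invalidates only the last two steps, so the slow `pip install` layer is reused.

```python
import hashlib
from typing import Dict, List, Tuple

# The tutorial's Dockerfile as (instruction, files it copies); "*" means the
# whole build context. This is a simplified, hypothetical model of caching.
STEPS: List[Tuple[str, List[str]]] = [
    ("FROM python:latest", []),
    ("WORKDIR /app", []),
    ("COPY requirements.txt .", ["requirements.txt"]),
    ("RUN pip install -r requirements.txt", []),
    ("COPY . .", ["*"]),
    ('CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]', []),
]


def cache_keys(context: Dict[str, str]) -> List[str]:
    """Chain a hash through the steps, so one change invalidates all later steps."""
    keys: List[str] = []
    previous = ""
    for instruction, sources in STEPS:
        h = hashlib.sha256()
        h.update(previous.encode())
        h.update(instruction.encode())
        names = sorted(context) if sources == ["*"] else sources
        for name in names:
            # COPY steps also depend on each copied file's name and content.
            h.update(b"\0" + name.encode() + b"\0" + context[name].encode())
        previous = h.hexdigest()
        keys.append(previous)
    return keys


def reused_steps(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Instructions whose modelled cache key is the same in both builds."""
    return [
        instruction
        for (instruction, _), before, after in zip(STEPS, cache_keys(old), cache_keys(new))
        if before == after
    ]
```

Under this model, changing main.py keeps the first four steps cached, while changing requirements.txt forces pip install (and everything after it) to run again.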

Build and Run the Docker Image

Now build the Docker image using the docker build command:

docker build -t my_fastapi_project .

This builds the image using the Dockerfile in the current directory (the trailing . sets the build context) and tags it with the name my_fastapi_project.

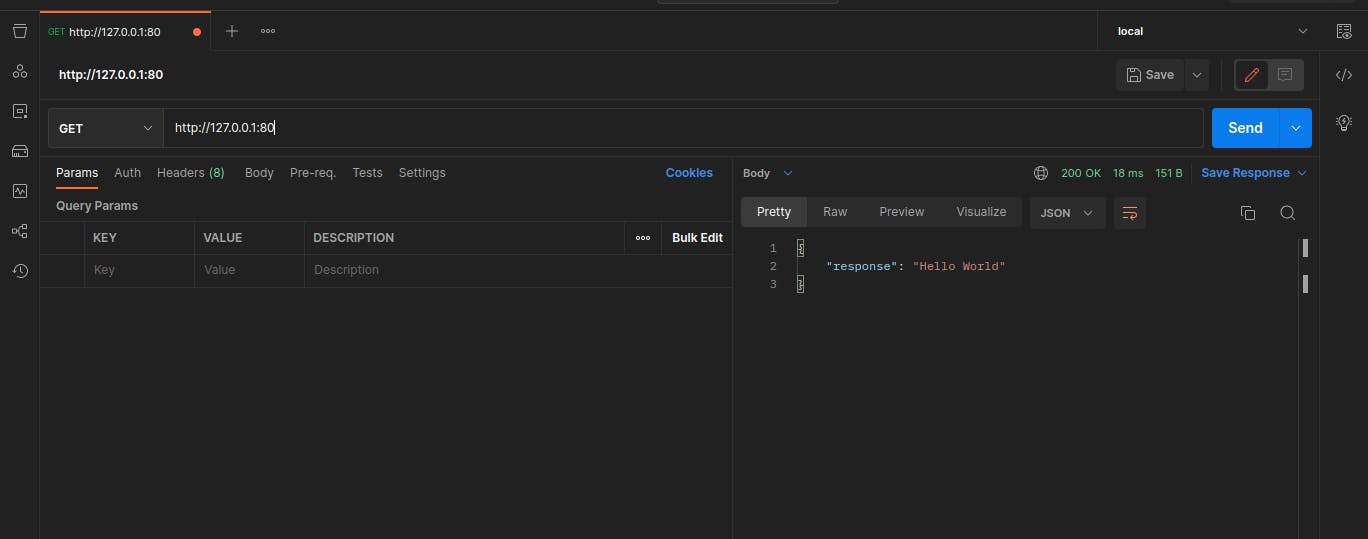

Once the image is built, you can run the container with the following command:

docker run -p 80:80 my_fastapi_project

This will start the container and map port 80 on the host machine to port 80 inside the container. You should now be able to access your FastAPI app by going to http://127.0.0.1 in your web browser.
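Instead of a browser, you can also check the running container from a script. The sketch below uses only the Python standard library (`urllib.request` and `json`); `fetch_json` is a hypothetical helper name, and the default URL assumes the `-p 80:80` mapping used above.

```python
import json
import urllib.request


def fetch_json(url: str = "http://127.0.0.1/", timeout: float = 5.0) -> dict:
    """GET a URL and decode its JSON body.

    Raises urllib.error.HTTPError for non-2xx responses and
    json.JSONDecodeError if the body is not valid JSON.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `fetch_json()` should return `{'response': 'Hello World'}`, the dictionary returned by the `index` function in main.py.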

Thank you!